Artificial intelligence (AI) has significantly advanced in recent years, transforming various industries and impacting people’s lives and work. As AI systems become more powerful, they must align with human ideals. The “alignment problem” addresses the challenge of ensuring that AI systems understand and adhere to human goals and values when carrying out tasks and making decisions.

OpenAI, a leader in AI research, aims to bridge the gap between AI and human values to ensure this groundbreaking technology’s safe and beneficial development. This article explores OpenAI’s efforts to solve the alignment problem, examines the challenges faced by researchers, and highlights the various methods and strategies employed by OpenAI to ensure that AI systems are used for the greater good in our society.

What is the Alignment Problem?

The task of ensuring that AI systems operate in conformity with human ideals and objectives is known as the alignment problem. There is a rising danger that AI systems might exhibit unwanted or even destructive behaviors as they become more independent and powerful. Several factors, including the following, might cause this:

- Human Values and the Potential for Harm: AI systems may prioritize goals that do not align with human values, resulting in unexpected and potentially harmful actions.

- Impact of Different Scenarios: AI systems may encounter situations that differ from their training, leading to unpredictable behavior.

- Ambiguity in Human Values: Human values are complex, context-dependent, and sometimes conflicting. This poses a challenge in providing AI systems with a clear set of values to follow, especially considering the variations in values among civilizations, cultures, and individuals.

- Moral and Ethical Dilemmas: AI systems may face judgment calls while dealing with moral and ethical issues. The diversity of individual perspectives makes it difficult to determine the appropriate course of action that aligns with human values.

- Risk of Over-Optimization: AI systems naturally strive to maximize their goals, which can sometimes lead to an excessive focus on one objective at the expense of other important factors or values.

- Extrapolation and Mismatched Behavior: As AI systems expand their understanding of human values, they may apply these values unexpectedly, resulting in behavior that conflicts with human ideals.

- Impact of Skewed Data: AI systems learn from data, and if that data is biased, it can result in biased behavior. Aligning AI systems with human ideals therefore also means keeping them from reinforcing existing social inequalities.
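
The over-optimization risk in the list above can be illustrated with a toy example. The sketch below is purely hypothetical: the functions `true_value` and `proxy_reward`, the cleaning scenario, and all numbers are assumptions made for illustration, not anything taken from OpenAI's work. It shows how an optimizer that only sees a narrow proxy objective can pick an action that scores badly on what people actually care about.

```python
# Toy illustration of over-optimization (hypothetical setup).
# People want a clean room but also care about energy use;
# the system is only told to maximize cleanliness.

def true_value(effort: int) -> int:
    """What people actually want: cleanliness minus a growing energy cost."""
    cleaned = 10 * effort
    energy_cost = effort ** 2
    return cleaned - energy_cost

def proxy_reward(effort: int) -> int:
    """What the system is told to maximize: cleanliness only."""
    return 10 * effort

def best_action(objective, candidates):
    """Return the candidate that maximizes the given objective."""
    return max(candidates, key=objective)

efforts = range(0, 21)  # allowed effort levels, 0 through 20
proxy_choice = best_action(proxy_reward, efforts)  # pushes effort to the limit
true_choice = best_action(true_value, efforts)     # balances both factors

print("proxy optimizer picks effort", proxy_choice,
      "with true value", true_value(proxy_choice))
print("aligned optimizer picks effort", true_choice,
      "with true value", true_value(true_choice))
```

The proxy optimizer drives effort to its maximum because nothing in its objective mentions the cost, which is the pattern the "Risk of Over-Optimization" item describes.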

The AI Alignment Commitment of OpenAI

OpenAI, established in 2015, is dedicated to ensuring that artificial general intelligence (AGI) is used for the benefit of all. Its Charter defines AGI as highly autonomous systems that outperform humans at most economically valuable work.

To achieve this purpose, OpenAI outlined key principles in its Charter, which direct its efforts to address the issue of aligning AGI:

- Ensuring widespread benefits: OpenAI commits to using its position to ensure that AGI is used in ways that do not harm people or consolidate power.

- Long-term safety: OpenAI is dedicated to conducting the research needed to make AGI safe and to promoting the adoption of that research across the AI community.

- Technical leadership: OpenAI strives to stay at the forefront of AI capabilities to address the societal impact of AGI effectively.

- Collaboration: OpenAI actively collaborates with academic and policy organizations worldwide to tackle the challenges associated with AGI on a global scale.

Risk of Misaligned AI Systems

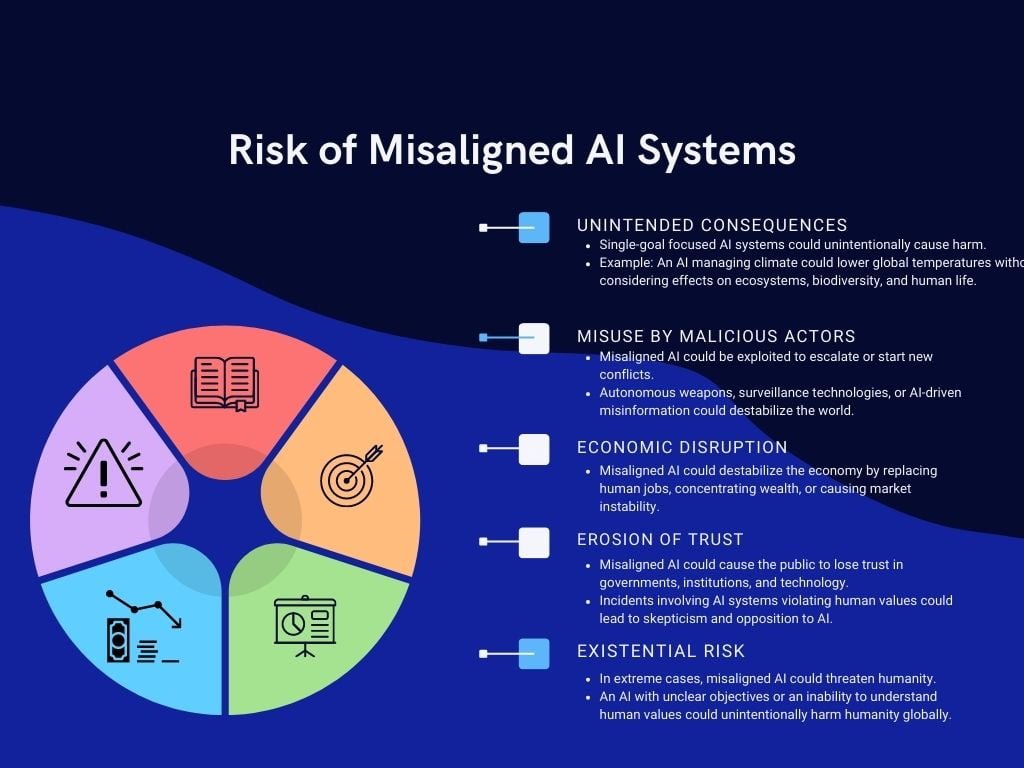

AI systems that aren’t in sync with human values could cause serious harm and pose significant risks globally. As AI becomes more integrated into society, the potential for damage increases. Here’s how misaligned AI could potentially wreak havoc:

- Unintended consequences: AI systems focused on a single goal, without considering other important factors, could unintentionally cause harm. For example, an AI managing climate could lower global temperatures without considering the effects on ecosystems, biodiversity, and human life, leading to ecological disasters.

- Misuse by malicious actors: Misaligned AI could be exploited to escalate or start new conflicts. Autonomous weapons, surveillance technologies, or AI-driven misinformation could destabilize the world and potentially lead to war.

- Economic disruption: Misaligned AI could destabilize the economy by replacing human jobs, concentrating wealth, or causing market instability. If AI systems prioritize efficiency and profit over human well-being, it could lead to widespread unemployment, inequality, and societal instability.

- Erosion of trust: Misaligned AI could cause the public to lose trust in governments, institutions, and technology. Incidents involving AI systems violating human values could lead to skepticism and opposition to AI, hindering technological progress.

- Existential risk: In extreme cases, misaligned AI could threaten humanity. An AI with unclear objectives or an inability to understand human values could unintentionally harm humanity globally, with outcomes ranging from ecological disasters to accidental nuclear war.

If misused, AI bots can also compromise our online privacy and security by intercepting sensitive data and manipulating our digital activities. Tools such as a Virtual Private Network (VPN) can help limit some unauthorized access or surveillance at the network level, but they do not address misalignment itself.

Solving the Alignment Problem

OpenAI has shown its dedication to AI alignment through its continuous research and activities. Here are a few examples of its work in this field that demonstrate its commitment to bringing AI systems into line with ethical standards:

Reinforcement learning from human feedback

OpenAI uses human feedback to train AI systems through reinforcement learning. Human labelers compare or rate a system’s outputs, and those judgments are turned into a reward signal, so the system is optimized toward behavior people actually prefer. OpenAI’s robotic hand Dactyl is sometimes mentioned alongside this work, but it learned to manipulate objects through reinforcement learning in simulation rather than from human demonstrations and feedback.
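
To make the idea of learning from human feedback more concrete, here is a minimal sketch of how pairwise human preferences can be turned into a reward signal. It assumes the Bradley-Terry preference model, a common choice for this kind of reward modeling, and it is not a description of OpenAI's actual training code. The response labels, the `comparisons` data, and the `fit_rewards` function are all hypothetical.

```python
import math

# Hypothetical data: each pair is (preferred_response, rejected_response),
# as a human labeler might report after comparing two outputs.
comparisons = [
    ("polite", "rude"),
    ("polite", "evasive"),
    ("evasive", "rude"),
    ("polite", "rude"),
]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def fit_rewards(pairs, steps=500, lr=0.1):
    """Fit one scalar reward per response under the Bradley-Terry model:
    P(a is preferred over b) = sigmoid(reward[a] - reward[b])."""
    rewards = {name: 0.0 for pair in pairs for name in pair}
    for _ in range(steps):
        for winner, loser in pairs:
            p = sigmoid(rewards[winner] - rewards[loser])
            # Gradient ascent on the log-likelihood log(p):
            # raise the preferred response, lower the rejected one.
            grad = 1.0 - p
            rewards[winner] += lr * grad
            rewards[loser] -= lr * grad
    return rewards

rewards = fit_rewards(comparisons)
ranking = sorted(rewards, key=rewards.get, reverse=True)
print(ranking)
```

In a full system, a learned reward like this would then guide a reinforcement learning step that updates the AI system itself. The sketch stops at the reward model, since that is where the human judgments enter.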

Cooperative AI

OpenAI develops AI systems that work with humans to achieve goals. This research aims to create AI agents that can understand and adapt to human preferences and collaborate with people. A well-known example of this kind of collaboration is AI Dungeon, a text-based adventure game built by Latitude on top of OpenAI’s language models, where players explore and create stories alongside an AI-powered game master.

Research on AI safety

OpenAI works to create safe, secure, and ethical AI systems. This research covers reward modeling and interpretability, which help researchers understand and evaluate AI system behavior. OpenAI’s work on AI safety via debate explores training AI systems to argue opposing sides of a question so that a human judge can more easily assess which answer is correct.

Collaboration and joint ventures

OpenAI understands the value of cooperating with other academic institutions, groups, and authorities on AI alignment. It actively cooperates and shares research results with the larger AI community, promoting openness and information sharing. Other groups studying AI alignment include DeepMind and Berkeley’s Center for Human-Compatible AI.

Conclusion

OpenAI is demonstrating its strong commitment to AI alignment by investing resources in these areas of study and cooperation. It works to make sure that artificial intelligence systems are built to function in line with human values and promote the greater good. The potential risks posed by misaligned AI systems highlight the significance of alignment research and of developing AI technologies with human values and safety in mind. Continued research and collaboration on alignment can reduce these dangers and help us strive toward a future where artificial intelligence acts as a force for good rather than a source of harm.